Train Fingers Classification Model Using NVidia DIGITS

NVidia DIGITS is a simple and useful integration of the Caffe deep learning framework into a cloud setting. I’ll use it for my pet project, finger recognition trained end-to-end. The resulting model can be easily loaded onto a mobile robot powered by a Jetson TX2, but let’s leave that for another post.

Introduction

Mobile robots need to sense the world around them and reason about it. Different sensors provide different modalities on which we build perception, control, localization, mapping and behavioral planning. Visual perception is key to a robot's perception system because of the high information density of images. Images, however, have always been difficult to process, both algorithmically and computationally. With the advances in deep learning and GPU computing, we can now build end-to-end systems for a specific task with a fraction of the effort needed a decade ago.

Gestures are a natural human interaction method that we can apply to mobile robot control. Several hand-gesture systems for mobile robot control have been proposed: a system built on image pre-processing and feature extraction stages [1], a wearable inertial-sensor-based system [3], a system based on hidden Markov models [4], and neural-network recognition of EOG gestures [5].

Here I explore an end-to-end system for gesture recognition, trained on a manually collected dataset using NVidia DIGITS.

Data Acquisition and Pre-processing

Data was collected manually using a MacBook Pro built-in camera and a Python script, grab_images.py. All script sources are available in my GitHub repo bexcite/fingers_classification.

python grab_images.py --size 28 --rate 10 --gray

It captures frames at a rate of 10 Hz, resizes each frame to 28x28 pixels, and saves it in grayscale.
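The per-frame processing can be sketched in plain Python. This is only an illustration, not the code of grab_images.py: the function names, the BT.601 luma weights, and the nearest-neighbor resize are all assumptions, and the frame here is a synthetic list of RGB tuples rather than a real camera image.

```python
def to_gray(rgb_image):
    """Convert rows of (r, g, b) tuples to rows of 0-255 gray values."""
    # ITU-R BT.601 luma weights, a common choice for RGB-to-gray conversion
    # (an assumption here, not taken from the original script).
    return [
        [int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
        for row in rgb_image
    ]


def resize_nearest(image, size):
    """Resize a 2D image to size x size using nearest-neighbor sampling."""
    height, width = len(image), len(image[0])
    return [
        [image[y * height // size][x * width // size] for x in range(size)]
        for y in range(size)
    ]


def preprocess(rgb_image, size=28):
    """Grayscale first, then shrink to the network input size."""
    return resize_nearest(to_gray(rgb_image), size)


# Synthetic 640x480 white frame standing in for a camera capture.
frame = [[(255, 255, 255)] * 640 for _ in range(480)]
small = preprocess(frame)
print(len(small), len(small[0]))  # 28 28
```

A real capture script would more likely use a library resize with interpolation, which usually gives smoother 28x28 images than nearest-neighbor sampling.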

Different lighting conditions, background variations, gesture angles and spatial rotations were used to cover a broad spectrum of possible uses.

As additional data augmentation, images were flipped horizontally to further increase the size and variety of the dataset.

python augment_images.py --input_dir <data> --output_dir <aug_data> --resize 28
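A horizontal flip is easy to picture in code. The sketch below is not the content of augment_images.py; the function names and the toy dataset are hypothetical. It assumes the class label depends only on the number of fingers shown, so a mirrored image keeps its label.

```python
def flip_horizontal(image):
    """Return a mirrored copy of a 2D image given as a list of rows."""
    return [list(reversed(row)) for row in image]


def augment(dataset):
    """Return the originals plus mirrored copies, keeping each label."""
    augmented = []
    for image, label in dataset:
        augmented.append((image, label))
        # Mirroring does not change how many fingers are visible,
        # so the label is reused as-is (an assumption about the classes).
        augmented.append((flip_horizontal(image), label))
    return augmented


# Toy 2x3 "image" labeled as two fingers; real inputs would be 28x28.
toy_dataset = [([[0, 128, 255], [10, 20, 30]], 2)]
for image, label in augment(toy_dataset):
    print(label, image)
```

Flipping doubles the number of samples and lets the network see left-hand and right-hand versions of each gesture.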

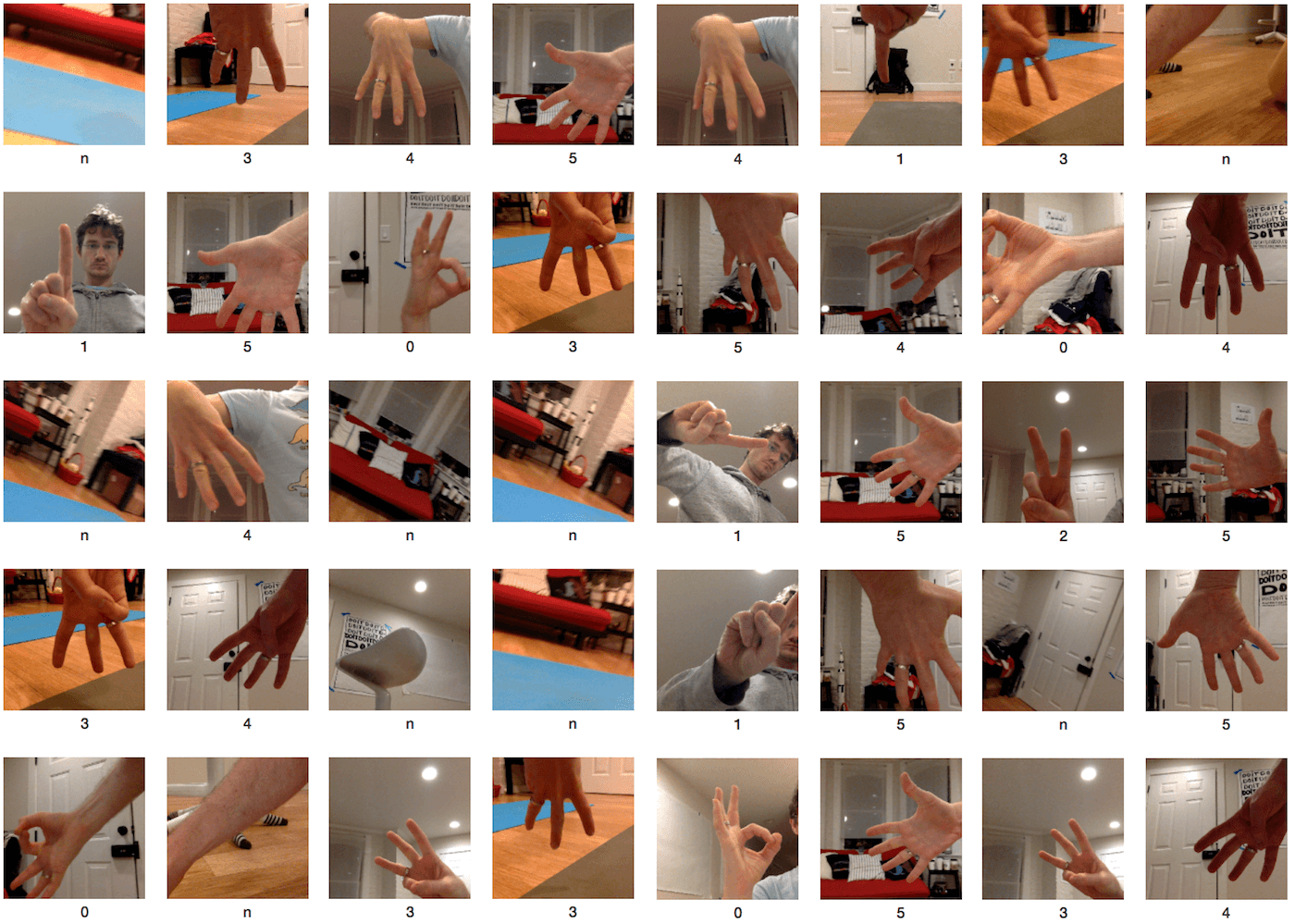

Below is an example of images collected before resizing.

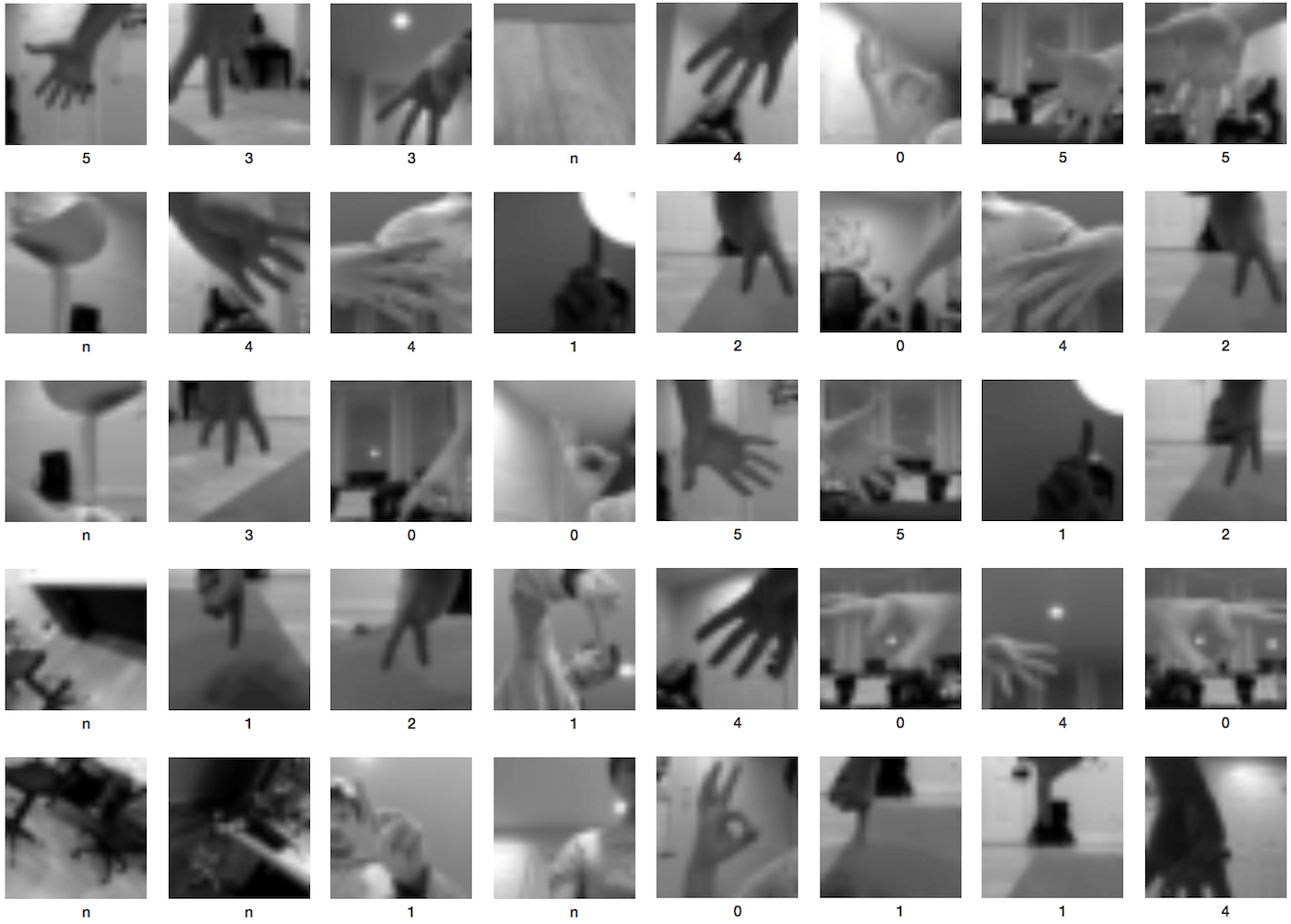

Examples of processed images that were used during training (scaled up for a better view).

Formulation and Model Training

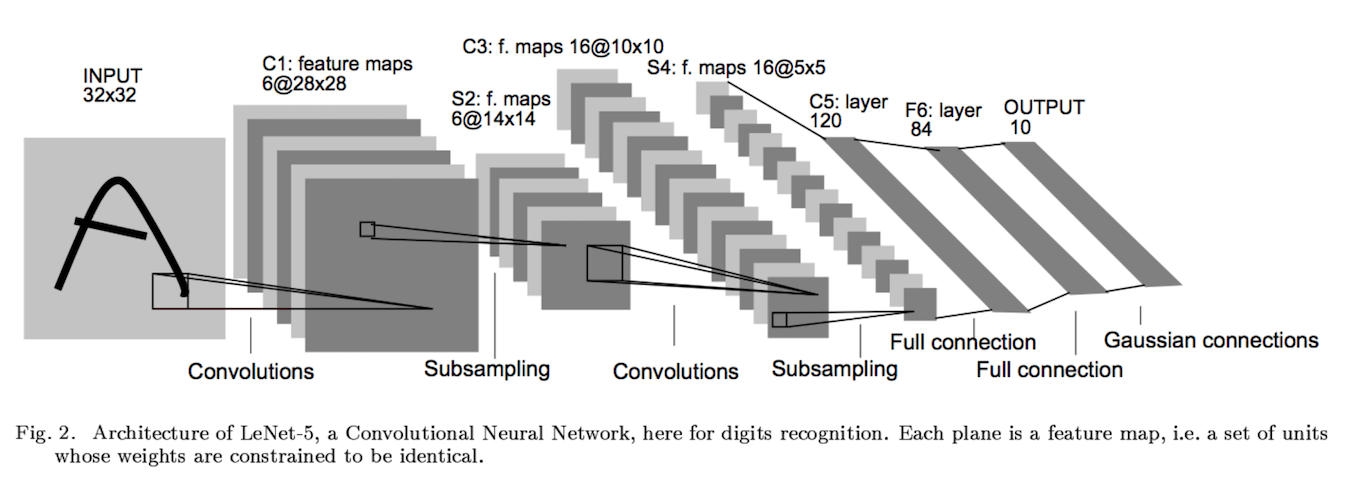

Finger recognition on a mobile robot should be as fast as possible and should not take compute time away from the control and localization pipelines. I’ve tested two ready-made networks in DIGITS, LeNet and GoogLeNet. The standard LeNet convolutional network, with gray images of size 28x28 pixels, gave the fastest results together with good accuracy.
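To get a feeling for how small this network is, the sketch below traces the feature-map sizes and counts the weights of a Caffe-style LeNet for a 28x28 grayscale input and 6 output classes. The layer sizes (20 and 50 filters of 5x5, 2x2 pooling, a 500-unit fully connected layer) are my assumption based on the standard Caffe LeNet definition; the exact network shipped with DIGITS may differ in details.

```python
def conv_out(size, kernel, stride=1):
    """Output width of a convolution or pooling layer without padding."""
    return (size - kernel) // stride + 1


def lenet_summary(input_size=28, channels=1, num_classes=6):
    """Trace feature-map sizes and count weights for a Caffe-style LeNet."""
    size = conv_out(input_size, 5)              # conv1: 20 filters, 5x5
    params = 20 * (channels * 5 * 5) + 20       # weights + biases
    size = conv_out(size, 2, stride=2)          # pool1: 2x2, stride 2
    size = conv_out(size, 5)                    # conv2: 50 filters, 5x5
    params += 50 * (20 * 5 * 5) + 50
    size = conv_out(size, 2, stride=2)          # pool2: 2x2, stride 2
    flat = 50 * size * size                     # flattened feature vector
    params += flat * 500 + 500                  # ip1: 500 hidden units
    params += 500 * num_classes + num_classes   # ip2: one output per class
    return size, flat, params


final_size, flat_features, total_params = lenet_summary()
print(final_size, flat_features, total_params)  # 4 800 429076
```

Under these assumptions the network has fewer than half a million parameters, which is consistent with the short training and inference times reported here.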

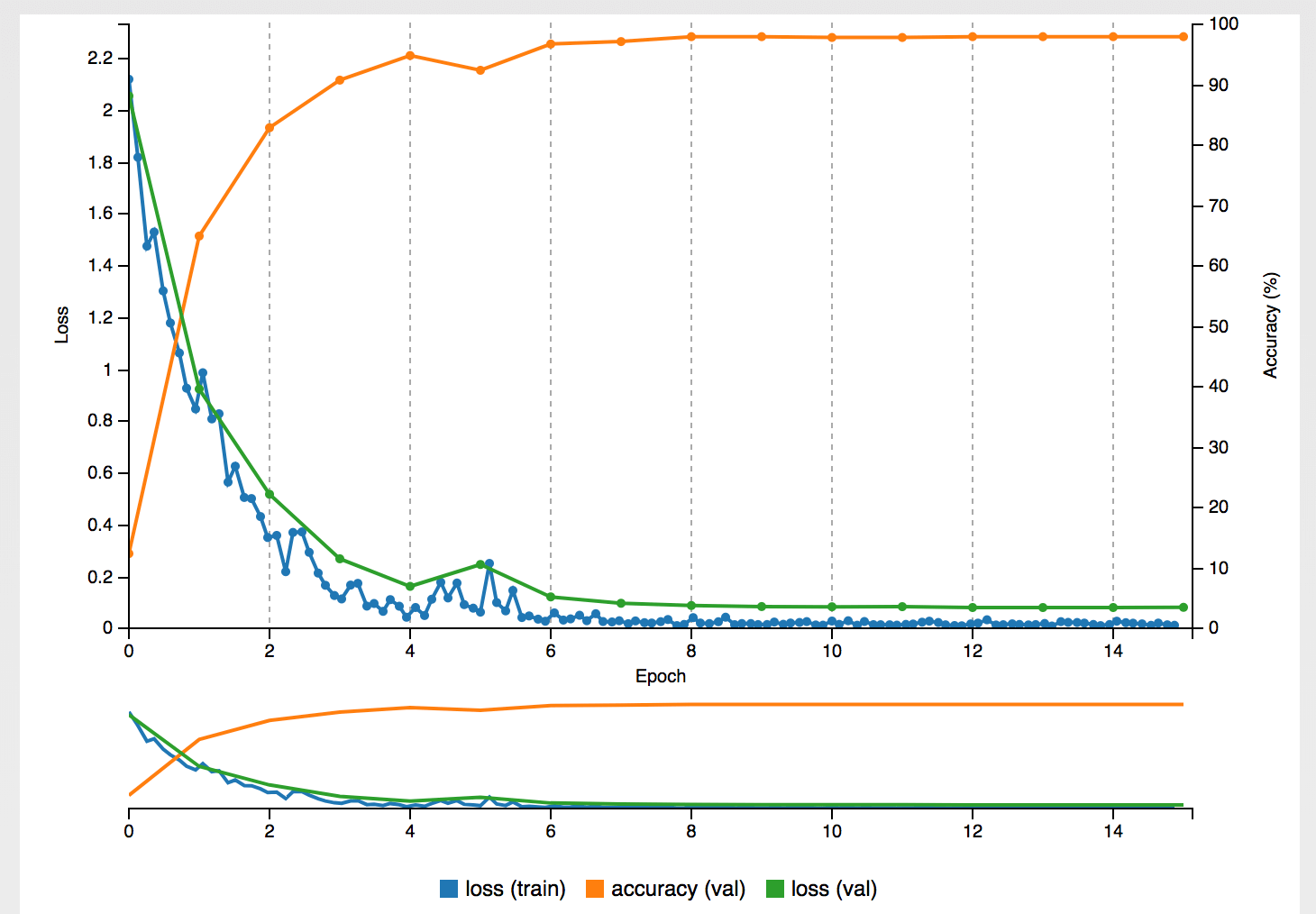

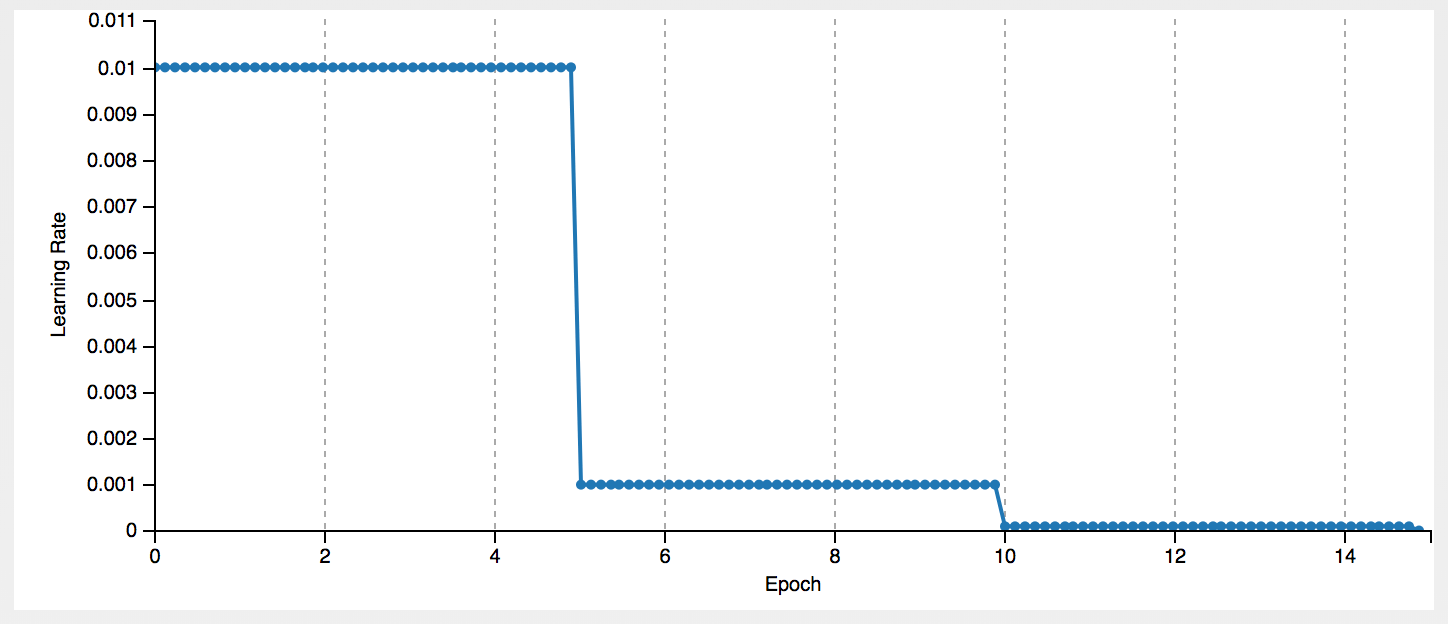

The network converged after around 10 epochs, in a matter of seconds.

It was trained using SGD with a starting learning rate of 0.01 and a scheduled decrease.
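The exact schedule is not recorded here, so the following is only a sketch of one common option, the Caffe "step" policy, where the rate is multiplied by a factor gamma every fixed number of steps. The gamma of 0.1, the step size of 10 epochs, and the 30-epoch run are illustrative assumptions, not the settings used for this model.

```python
def step_learning_rate(base_lr, gamma, step_size, iteration):
    """Caffe "step" policy: base_lr * gamma ** floor(iteration / step_size)."""
    return base_lr * gamma ** (iteration // step_size)


# Hypothetical schedule: start at 0.01 and divide by 10 every 10 epochs.
for epoch in range(0, 30, 5):
    lr = step_learning_rate(base_lr=0.01, gamma=0.1, step_size=10, iteration=epoch)
    print(f"epoch {epoch:2d}: lr = {lr:.5f}")
```

Caffe applies this formula per iteration rather than per epoch; epochs are used here only to keep the numbers readable.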

Results

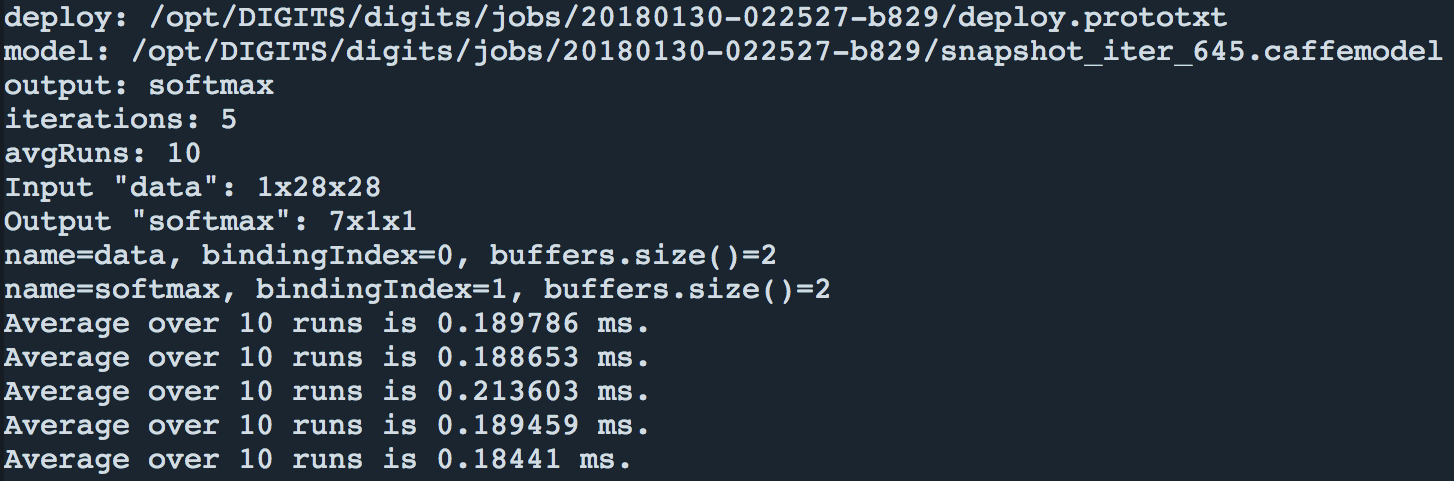

The LeNet network trained on the fingers dataset showed good accuracy for all classes and an inference time of 0.19 ms on a Tesla K80 GPU.

Below is the TensorRT output from the inference speed evaluation:
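To put that number in perspective, here is a small back-of-the-envelope calculation. It assumes the 0.19 ms figure is the time for a single image and that the robot camera delivers frames at 10 Hz, the same rate used for data collection; both are assumptions, and a Jetson TX2 will be slower than a K80.

```python
def inferences_per_second(latency_ms):
    """Upper bound on sequential inferences per second at a given latency."""
    return 1000.0 / latency_ms


def budget_fraction(latency_ms, frame_rate_hz):
    """Fraction of each frame interval spent on inference."""
    frame_interval_ms = 1000.0 / frame_rate_hz
    return latency_ms / frame_interval_ms


# 0.19 ms per image is the measured value; 10 Hz is an assumed camera rate.
print(f"{inferences_per_second(0.19):.0f} inferences per second")
print(f"{budget_fraction(0.19, 10) * 100:.2f}% of each frame interval")
```

Under those assumptions, inference takes a tiny share of each frame interval, leaving nearly all of the compute time for control and localization.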

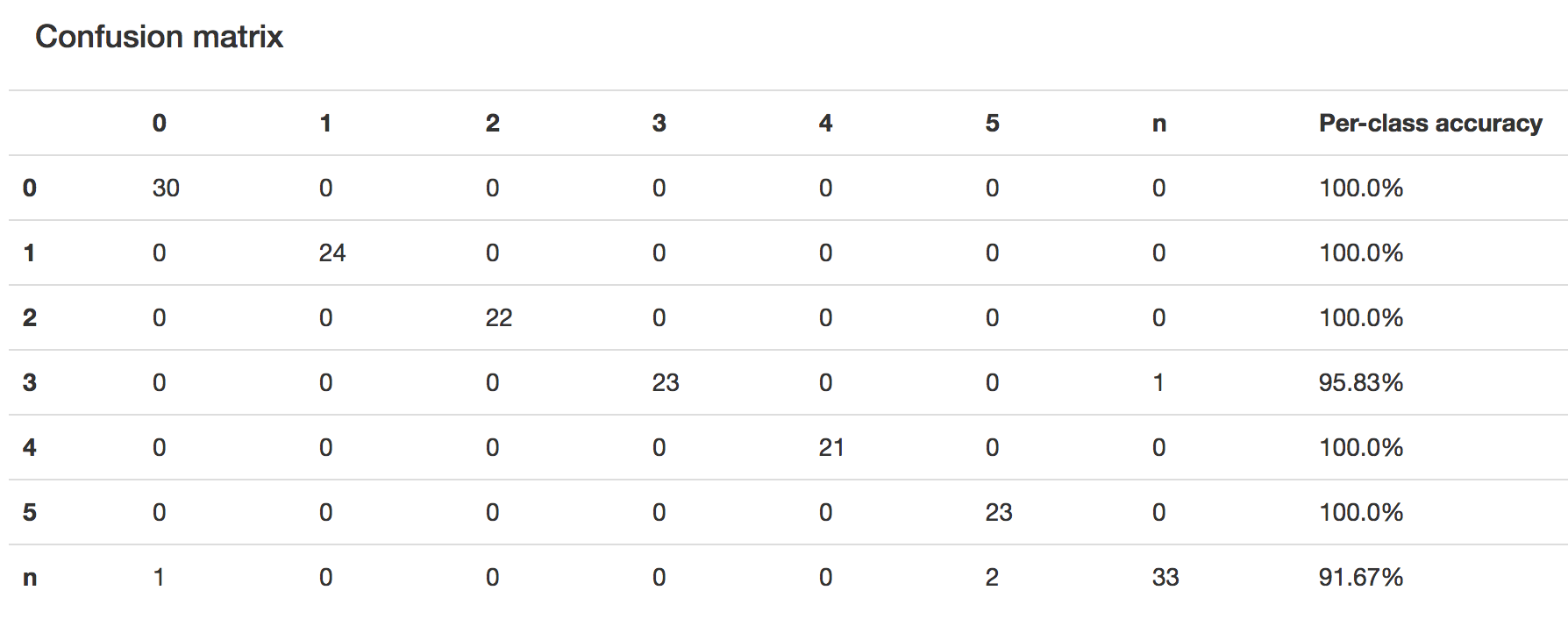

Confusion matrix for the test dataset:
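For readers who want to build such a matrix from their own predictions, here is a minimal sketch. The labels below are made up purely to exercise the code and are not results from this model; the function names are hypothetical, and classes are assumed to be integers from 0 to 5.

```python
def confusion_matrix(true_labels, predicted_labels, num_classes=6):
    """Rows are true classes, columns are predicted classes."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for truth, predicted in zip(true_labels, predicted_labels):
        matrix[truth][predicted] += 1
    return matrix


def per_class_accuracy(matrix):
    """Share of correctly classified samples per true class (None if empty)."""
    accuracies = []
    for label, row in enumerate(matrix):
        total = sum(row)
        accuracies.append(row[label] / total if total else None)
    return accuracies


# Made-up labels for illustration only, not measurements from this post.
truth = [0, 0, 1, 2, 2, 3, 4, 5, 5, 5]
preds = [0, 0, 1, 2, 3, 3, 4, 5, 5, 4]
matrix = confusion_matrix(truth, preds)
for row in matrix:
    print(row)
print(per_class_accuracy(matrix))
```

Off-diagonal counts show which gestures get mistaken for each other, which is more informative than a single accuracy number.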

Discussion

It was unexpected that LeNet and 28x28 gray images of 6 possible finger gestures could produce acceptable results. However, a closer look at the 28x28 images of the training dataset shows that the depicted gesture and the number of fingers can be recognized by eye in almost all of them, so it is plausible that the convolutional layers were able to extract meaningful features too.

Next

Next, I’m planning to load this network onto a Jetson TX2 and see how it performs in real time on smaller hardware, so that eventually my robot can recognize hand gesture commands while navigating around an apartment.

References

[1] Ali, Muaammar Hadi Kuzman, M. Asyraf Azman, and Zool Hilmi Ismail. “Real-time hand gestures system for mobile robots control.” Procedia Engineering 41 (2012): 798-804.

[2] Manigandan, Mr, and I. Manju Jackin. “Wireless vision based mobile robot control using hand gesture recognition through perceptual color space.” Advances in Computer Engineering (ACE), 2010 International Conference on. IEEE, 2010.

[3] Stančić, Ivo, Josip Musić, and Tamara Grujić. “Gesture recognition system for real-time mobile robot control based on inertial sensors and motion strings.” Engineering Applications of Artificial Intelligence 66 (2017): 33-48.

[4] Iba, Soshi, et al. “An architecture for gesture-based control of mobile robots.” Intelligent Robots and Systems, 1999. IROS’99. Proceedings. 1999 IEEE/RSJ International Conference on. Vol. 2. IEEE, 1999.

[5] Sasaki, Minoru, and Yanagido Gifu. “Mobile Robot Control by Neural Networks EOG Gesture Recognition.”

[6] Sabour, Sara, Nicholas Frosst, and Geoffrey E. Hinton. “Dynamic routing between capsules.” Advances in Neural Information Processing Systems. 2017.

[7] LeCun, Yann, et al. “Gradient-based learning applied to document recognition.” Proceedings of the IEEE 86.11 (1998): 2278-2324.